A research team led by Prof. LU Xiaoqiang from Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS) proposed a novel mutual attention inception network (MAIN) and a dataset named RSIVQA for remote sensing visual question answering. The results were published in IEEE TRANSACTIONS ON GEOSCIENCE AND REMOTE SENSING.

Remote sensing visual question answering (VQA) mainly aims at making semantic understanding of remote sensing images (RSIs) objective and interactive. Specifically, given an RSI, an intelligent agent will answer a question about the remote sensing scene.

Most of the existing methods ignore the spatial information of RSIs and word-level semantic information of questions which restricts their applications in many complex scenes.

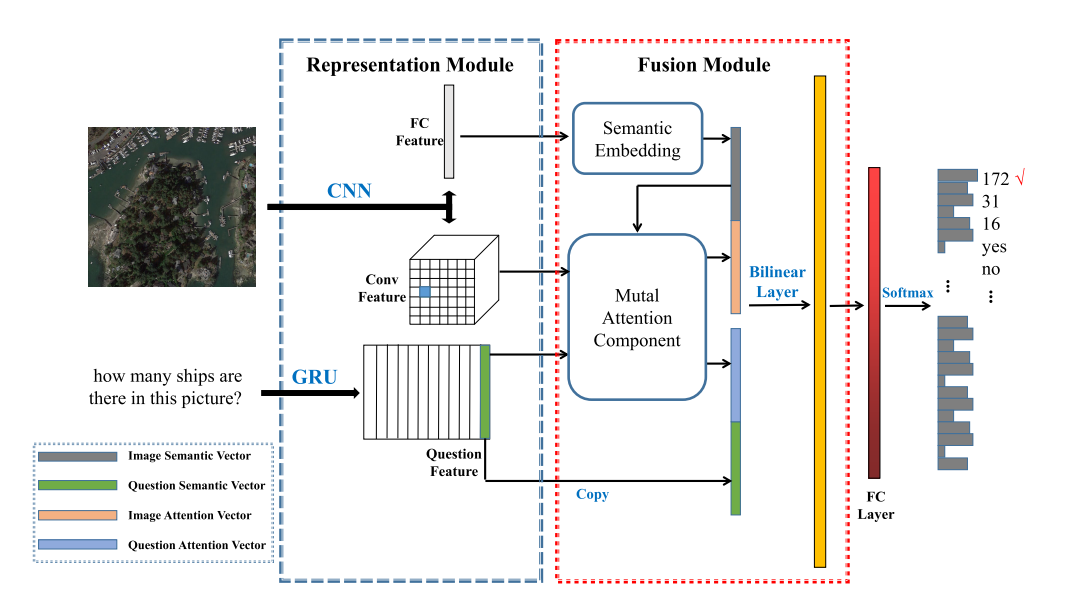

To address this problem, the proposed MAIN was made up of two parts, including the representation module and the fusion module. The representation module was devised to obtain the features of image and question which can provide better representations. As for the fusion module, it enhanced the discriminative ability of representations which can acquire correct answers by reinforcing the representations of image and question.

According to the experiments results, the proposed method can capture the alignments between images and questions under different evaluation metrics. Moreover, this article provides a new perspective for the remote sensing visual question answering.

Figure 1 Overview of the proposed method. (Image by XIOPM)

Download: