Multi-source image fusion combines images of different types so that the strengths of each source complement one another, producing a single, more informative result.

Specifically, long-wave infrared (thermal) images are insensitive to illumination and can distinguish objects from the background by their different thermal radiation. However, they lack the spatial resolution and texture detail that RGB images provide. In contrast, the quality of RGB images is sensitive to lighting conditions.

This observation suggests that fusing the two sources could produce a new image with clear objects and high resolution, meeting the requirements of all-weather, day-and-night monitoring.

To test this idea, LIU Luolin, a student at the Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS), proposed TSFNet, a two-stream end-to-end model for thermal and visible image fusion. The results were published in Neurocomputing.

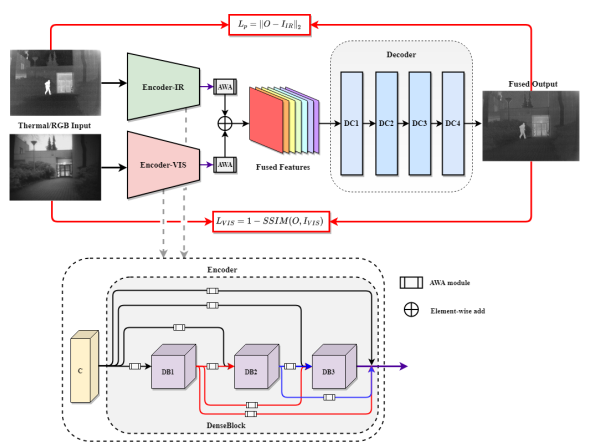

Figure 1. Illustration of the proposed network structure (Image by XIOPM)

As shown in Figure 1, TSFNet employs two branches for feature learning, and the overall framework is divided into three modules: feature extraction, fusion, and reconstruction. Unlike existing methods, TSFNet fully captures the information in the source images to achieve better performance. With an adaptive weight allocation strategy, the model autonomously retains the detailed information from each corresponding image. Experiments on public datasets demonstrate that TSFNet outperforms state-of-the-art methods across different evaluation metrics. In the future, TSFNet is expected to provide guidance for designing new image fusion networks.
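To make the three-module pipeline (two feature-extraction branches, adaptive fusion, reconstruction) more concrete, here is a deliberately simplified, non-learned sketch in plain Python. It is not the authors' implementation: TSFNet learns these components end to end, whereas this sketch swaps in hand-crafted stand-ins. The function names, the neighbour-difference activity measure, the per-pixel softmax weighting and the `temperature` parameter are all hypothetical choices made for illustration.

```python
import math
from typing import List

# A grayscale image as a list of rows with intensities in [0, 1].
Image = List[List[float]]


def extract_features(img: Image) -> Image:
    """Hypothetical branch stand-in: activity = |pixel - mean of its 4 neighbours|.

    Borders use edge replication. A learned branch would replace this measure.
    """
    h, w = len(img), len(img[0])

    def px(r: int, c: int) -> float:
        # Clamp coordinates so border pixels reuse their nearest in-image neighbour.
        return img[min(max(r, 0), h - 1)][min(max(c, 0), w - 1)]

    return [
        [abs(img[r][c] - (px(r - 1, c) + px(r + 1, c) + px(r, c - 1) + px(r, c + 1)) / 4.0)
         for c in range(w)]
        for r in range(h)
    ]


def adaptive_weights(f_ir: Image, f_vis: Image, temperature: float = 0.1) -> Image:
    """Hypothetical fusion stand-in: per-pixel softmax over the two branch activities.

    Returns the weight for the infrared image; the visible image gets 1 - weight.
    A lower temperature makes the per-pixel choice sharper.
    """
    weights = []
    for row_ir, row_vis in zip(f_ir, f_vis):
        out = []
        for a, b in zip(row_ir, row_vis):
            m = max(a, b)  # subtract the max before exp for numerical stability
            ea = math.exp((a - m) / temperature)
            eb = math.exp((b - m) / temperature)
            out.append(ea / (ea + eb))
        weights.append(out)
    return weights


def fuse(ir: Image, vis: Image) -> Image:
    """Two-stream sketch: extract features per branch, weight adaptively, reconstruct."""
    if len(ir) != len(vis) or any(len(a) != len(b) for a, b in zip(ir, vis)):
        raise ValueError("infrared and visible images must have the same shape")
    f_ir = extract_features(ir)    # infrared (thermal) branch
    f_vis = extract_features(vis)  # visible (RGB, here grayscale) branch
    w = adaptive_weights(f_ir, f_vis)
    # Hypothetical reconstruction stand-in: per-pixel weighted sum of the sources.
    return [
        [wi * a + (1.0 - wi) * b for wi, a, b in zip(w_row, ir_row, vis_row)]
        for w_row, ir_row, vis_row in zip(w, ir, vis)
    ]


if __name__ == "__main__":
    ir = [[0.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 0.0]]  # hot target in the centre
    vis = [[0.5] * 3 for _ in range(3)]                     # flat, low-contrast scene
    for row in fuse(ir, vis):
        print([round(v, 3) for v in row])
```

In this toy example, the centre pixel, where the infrared branch is far more active, takes almost all of its value from the thermal image, while the corners, where neither branch responds, are averaged equally. A learned model would replace each hand-crafted stage with trained layers.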
