A research team led by Prof. Dr. LU Xiaoqiang from the Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS) has proposed a novel method for unsupervised learning of human action categories in still images.

In contrast to previous methods, the proposed method mines distinctive information about actions directly from unlabeled image databases, learning discriminative deep representations without supervision so that different actions can be told apart.

With the proposed method, action image collections can be used without manual annotations. Because discriminative deep representations are difficult to learn without supervision, the method first builds a training dataset with surrogate labels from the unlabeled dataset.
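The release does not say how the surrogate labels are first assigned. As a minimal sketch, assuming each unlabeled image has already been mapped to a feature vector, one common option is to cluster those vectors and treat the cluster indices as surrogate labels. The function `surrogate_labels` below is hypothetical: it uses plain k-means with deterministic farthest-point initialization and is not necessarily the authors' procedure.

```python
from typing import List, Sequence

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two feature vectors."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def surrogate_labels(features: List[Vector], k: int, iters: int = 20) -> List[int]:
    """Hypothetical: assign surrogate labels by k-means clustering of image features."""
    # Farthest-point initialization: deterministic and spreads the centroids apart.
    centroids = [list(features[0])]
    while len(centroids) < k:
        farthest = max(features, key=lambda f: min(squared_distance(f, c) for c in centroids))
        centroids.append(list(farthest))
    labels = [0] * len(features)
    for _ in range(iters):
        # Assignment step: each image takes the index of its nearest centroid.
        labels = [min(range(k), key=lambda j: squared_distance(f, centroids[j]))
                  for f in features]
        # Update step: move each centroid to the mean of its members.
        for j in range(k):
            members = [f for f, lab in zip(features, labels) if lab == j]
            if members:  # keep the old centroid if a cluster empties out
                centroids[j] = [sum(col) / len(members) for col in zip(*members)]
    return labels
```

Any clustering that yields one integer per image would fit the same role; k-means is used here only because it is short and familiar.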

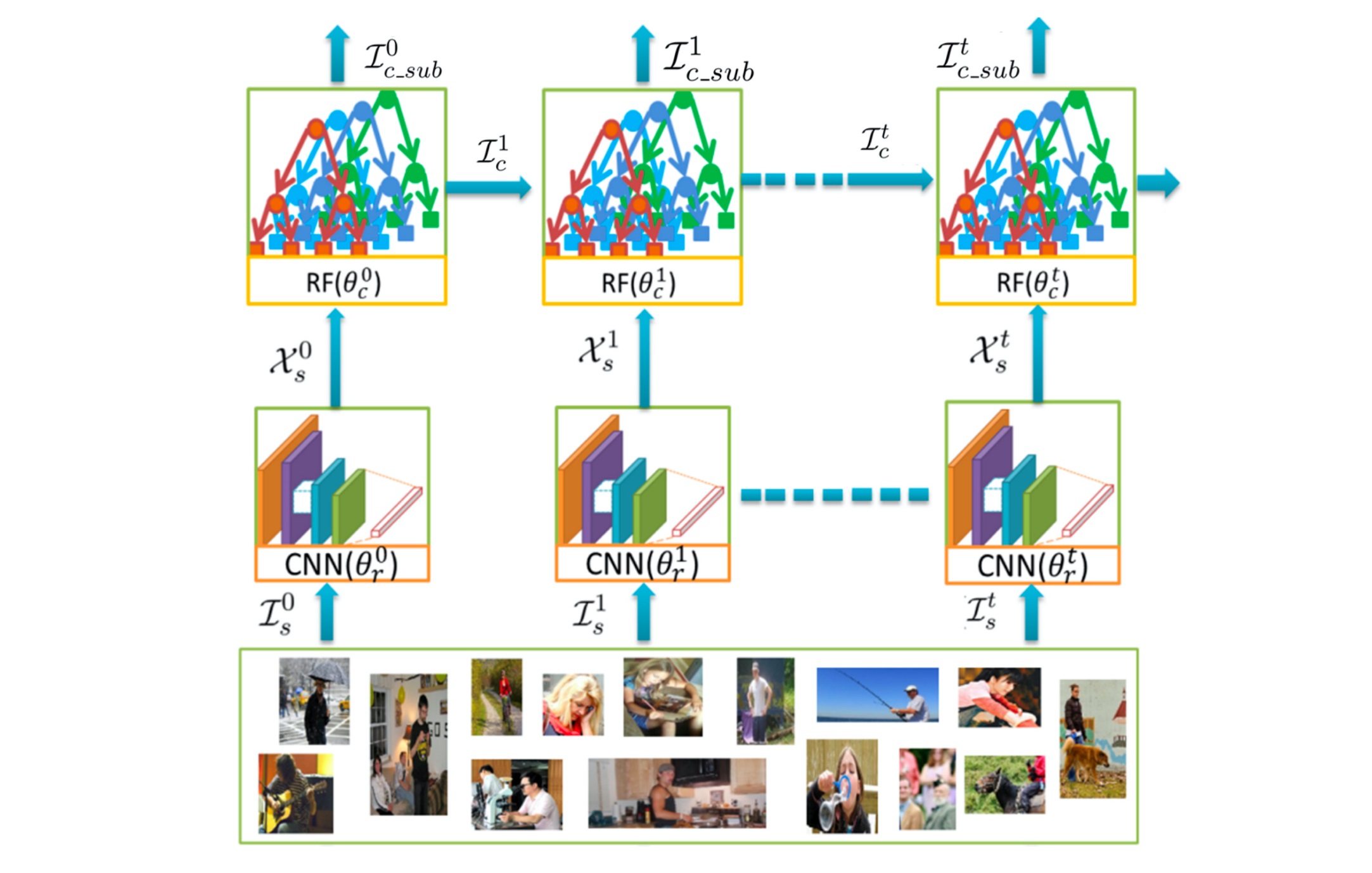

The method then learns discriminative representations by alternately updating the convolutional neural network (CNN) parameters and the surrogate training dataset in an iterative manner. To exploit the discriminative information among different action categories, the training batches used to update the CNN parameters are built from triplet groups, and a triplet loss function is introduced to update the CNN parameters.
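The triplet step can be illustrated with a short sketch. It assumes the CNN outputs are already available as embedding vectors, measures distance as squared Euclidean distance, and uses a hypothetical margin of 0.2; the release specifies neither the distance nor the margin. `build_triplets` pairs each anchor with a positive that shares its surrogate label and a negative that does not, and `triplet_loss` averages the hinge max(0, d(a, p) - d(a, n) + margin) over those triplets.

```python
from itertools import product
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def squared_distance(a: Vector, b: Vector) -> float:
    """Squared Euclidean distance between two embeddings."""
    return sum((x - y) ** 2 for x, y in zip(a, b))


def build_triplets(labels: Sequence[int]) -> List[Tuple[int, int, int]]:
    """All (anchor, positive, negative) index triplets implied by surrogate labels."""
    n = len(labels)
    return [
        (a, p, q)
        for a, p, q in product(range(n), repeat=3)
        if a != p and labels[a] == labels[p] and labels[a] != labels[q]
    ]


def triplet_loss(emb: Sequence[Vector], triplets: Sequence[Tuple[int, int, int]],
                 margin: float = 0.2) -> float:
    """Mean hinge loss max(0, d(a, p) - d(a, n) + margin) over the given triplets."""
    if not triplets:
        return 0.0
    total = 0.0
    for a, p, q in triplets:
        gap = squared_distance(emb[a], emb[p]) - squared_distance(emb[a], emb[q])
        total += max(0.0, gap + margin)
    return total / len(triplets)
```

Driving this loss to zero means every anchor sits at least `margin` closer to its positives than to its negatives. Enumerating every triplet, as done here, grows cubically with the number of images, so practical training would sample triplets per batch instead.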

Furthermore, to learn more discriminative deep representations, a Random Forest classifier is used to update the surrogate training dataset, so that more informative triplet groups can then be built from the updated dataset.
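How the Random Forest updates the surrogate dataset is not detailed in the release. The sketch below assumes one plausible rule: train a small forest on the current CNN features and surrogate labels, relabel each image with the forest's majority vote, and keep only images whose vote share reaches a hypothetical `min_vote` threshold. The forest is a deliberately tiny pure-Python implementation (bootstrap samples, random feature subsets, Gini-impurity splits); the function names and parameters are illustrative, not taken from the paper.

```python
import random
from collections import Counter
from typing import List, Sequence, Tuple

Vector = Sequence[float]


def gini(labels: Sequence[int]) -> float:
    """Gini impurity of a list of labels."""
    n = len(labels)
    return 1.0 - sum((c / n) ** 2 for c in Counter(labels).values())


def build_tree(X, y, depth, max_depth, n_feats, rng):
    """Grow a small tree: nodes are ("leaf", label) or ("split", f, t, left, right)."""
    majority = Counter(y).most_common(1)[0][0]
    if depth >= max_depth or len(set(y)) == 1:
        return ("leaf", majority)
    best = None  # (weighted impurity, feature index, threshold)
    for f in rng.sample(range(len(X[0])), n_feats):  # random feature subset
        vals = sorted(set(x[f] for x in X))
        for t in ((a + b) / 2 for a, b in zip(vals, vals[1:])):  # midpoint thresholds
            left = [lab for x, lab in zip(X, y) if x[f] <= t]
            right = [lab for x, lab in zip(X, y) if x[f] > t]
            if not left or not right:
                continue
            score = (len(left) * gini(left) + len(right) * gini(right)) / len(y)
            if best is None or score < best[0]:
                best = (score, f, t)
    if best is None:  # no usable split on the sampled features
        return ("leaf", majority)
    _, f, t = best
    li = [i for i, x in enumerate(X) if x[f] <= t]
    ri = [i for i, x in enumerate(X) if x[f] > t]
    left_node = build_tree([X[i] for i in li], [y[i] for i in li], depth + 1, max_depth, n_feats, rng)
    right_node = build_tree([X[i] for i in ri], [y[i] for i in ri], depth + 1, max_depth, n_feats, rng)
    return ("split", f, t, left_node, right_node)


def predict_tree(node, x: Vector) -> int:
    """Walk from the root to a leaf and return its label."""
    while node[0] == "split":
        _, f, t, left, right = node
        node = left if x[f] <= t else right
    return node[1]


def random_forest(X, y, n_trees: int = 15, max_depth: int = 3, seed: int = 0):
    """Bagged trees, each trained on a bootstrap sample with random feature subsets."""
    rng = random.Random(seed)
    n_feats = max(1, int(len(X[0]) ** 0.5))
    trees = []
    for _ in range(n_trees):
        idx = [rng.randrange(len(X)) for _ in range(len(X))]  # bootstrap sample
        trees.append(build_tree([X[i] for i in idx], [y[i] for i in idx], 0, max_depth, n_feats, rng))
    return trees


def update_surrogate(X, y, min_vote: float = 0.6, seed: int = 0) -> List[Tuple[int, int]]:
    """Hypothetical update: relabel by majority vote, keep only confident samples."""
    forest = random_forest(X, y, seed=seed)
    kept = []
    for i, x in enumerate(X):
        label, count = Counter(predict_tree(t, x) for t in forest).most_common(1)[0]
        if count / len(forest) >= min_vote:
            kept.append((i, label))
    return kept
```

Votes here are taken on the same samples the trees were trained on; an out-of-bag vote, using only trees whose bootstrap sample excluded the image, would give a less optimistic confidence estimate.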

Extensive experiments on four benchmark datasets demonstrate the effectiveness of the proposed method.

The diagram of the proposed method. (Image by XIOPM)


(Original research article: ACM Transactions on Multimedia Computing, Communications, and Applications (2019), https://doi.org/10.1145/3362161)
