Infrared and visible image fusion has become a research hotspot because the fused result can preserve both the thermal objects and the background details captured by different sensors. The technique shows great promise in human visual perception, military applications, remote sensing, and target recognition and tracking.

Several methods have been proposed to simplify the image fusion process, including multi-scale decomposition-based fusion, fusion based on quadtree decomposition and Bezier interpolation, structure-transferring fusion, and convolutional neural networks.

However, a critical problem has been neglected. In most cases, the quality of the visible image is easily degraded by the imaging device or by weather conditions. A harsh operating environment can keep important detail and texture information in visible images from being displayed. Is there a method that addresses this defect?

A research team led by Prof. Dr. REN Long from the Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS) has proposed a novel image fusion method based on a decomposition and division-based strategy. The results were published in Infrared Physics and Technology.

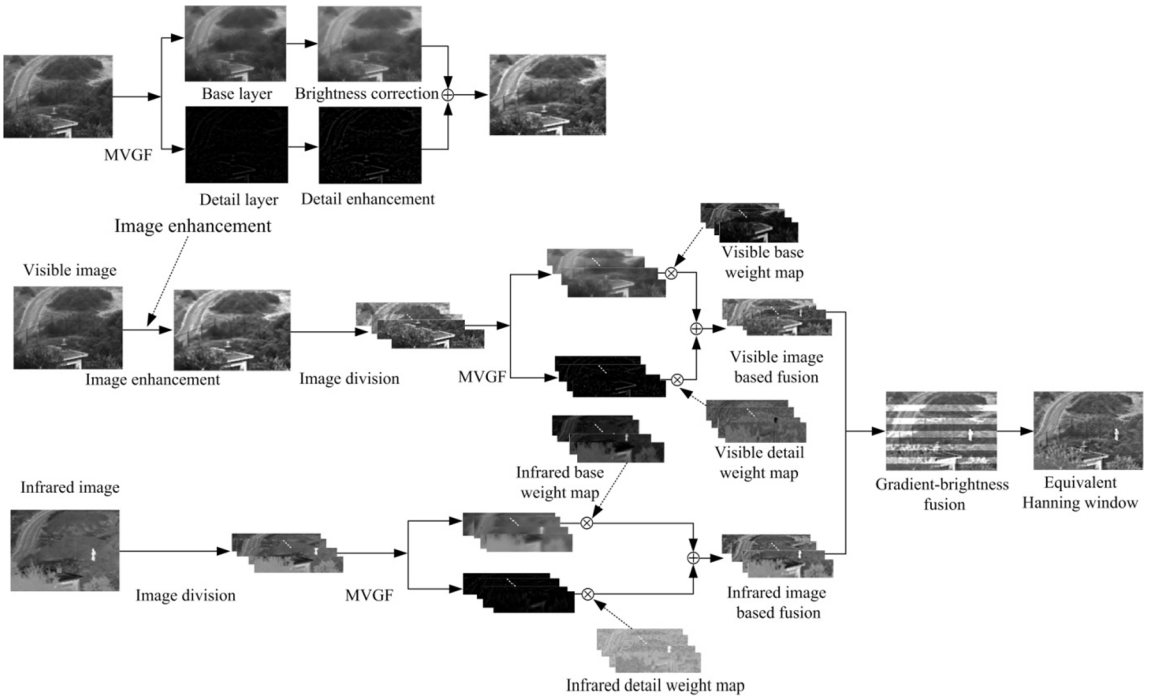

The schematic diagram of the proposed infrared and visible image fusion framework. (Image by XIOPM)

The proposed method introduces an edge-preserving filter called the “weighted variance guided filter”, which is based on the traditional guided filter, together with a novel algorithm for enhancing visible image contrast before fusion. The improved guided filter takes the gradient and the weighted variance in the local window into account.
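For readers unfamiliar with the traditional guided filter that the new filter builds on, the following is a minimal Python sketch of that classical filter only. It is not the team's weighted variance guided filter, whose gradient and variance weighting is not described here. The function names `box_mean` and `guided_filter`, the window radius `r` and the regularization term `eps` are illustrative assumptions.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window, using reflected borders."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="reflect")
    # Integral image with a leading row and column of zeros.
    s = np.zeros((padded.shape[0] + 1, padded.shape[1] + 1))
    s[1:, 1:] = padded.cumsum(axis=0).cumsum(axis=1)
    h, w = img.shape
    total = s[k:k + h, k:k + w] - s[:h, k:k + w] - s[k:k + h, :w] + s[:h, :w]
    return total / (k * k)


def guided_filter(guide, src, r=4, eps=1e-3):
    """Classical guided filter (illustrative sketch, not the weighted variant).

    guide, src: 2-D float arrays of the same shape.
    r: window radius (assumed value); eps: regularization (assumed value).
    """
    guide = np.asarray(guide, dtype=np.float64)
    src = np.asarray(src, dtype=np.float64)

    mean_g = box_mean(guide, r)
    mean_s = box_mean(src, r)
    var_g = box_mean(guide * guide, r) - mean_g * mean_g
    cov_gs = box_mean(guide * src, r) - mean_g * mean_s

    # Local linear model in each window: output = a * guide + b.
    a = cov_gs / (var_g + eps)
    b = mean_s - a * mean_g

    # Average the coefficients of all windows that cover each pixel.
    return box_mean(a, r) * guide + box_mean(b, r)


if __name__ == "__main__":
    # A vertical step edge: self-guided filtering should keep it sharp.
    step = np.zeros((32, 32))
    step[:, 16:] = 1.0
    smoothed = guided_filter(step, step, r=2, eps=1e-4)
    print(float(np.abs(smoothed - step).max()))
```

In this sketch, a small `eps` keeps strong edges nearly intact while flat regions are averaged, which is the edge-preserving behaviour the article refers to.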

Fusion in the proposed method is carried out with two different strategies: one takes the sub infrared base layer as the main image, while the other takes the sub visible base layer as the main image. Two different sub-fusion results are thus obtained.

The fusion results indicate that the contrast and brightness of the fused images are greatly improved, and that image edges and texture details are well preserved and successfully integrated. In addition, most objective evaluation indexes indicate that the proposed method outperforms the other methods compared.

The proposed method significantly improves image decomposition and suppresses artifacts around image boundaries, and it is particularly suitable for fusion applications under low-contrast conditions.