Fringe projection 3D imaging is a widely used technique for shape measurement. Three or more phase-shifted structured light patterns, typically linear sinusoidal fringes, are projected onto the surface under measurement. A camera then captures multiple images of the surface with the distorted, phase-shifted fringes, and the surface shape is estimated from these images.
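As a rough illustration of how phase is recovered from phase-shifted fringes, the sketch below applies the standard N-step phase-shifting formula to a single pixel. The intensity model `offset + modulation * cos(phase + 2*pi*k/N)`, the function names, and the parameter values are assumptions made for this example and are not taken from the paper.

```python
import math


def simulate_pixel(phase, steps=4, offset=0.5, modulation=0.4):
    """Simulate the intensities one camera pixel records for `steps`
    equally spaced phase shifts (hypothetical intensity model)."""
    return [
        offset + modulation * math.cos(phase + 2 * math.pi * k / steps)
        for k in range(steps)
    ]


def wrapped_phase(intensities):
    """Recover the wrapped phase, in (-pi, pi], from N >= 3 intensities
    taken with equally spaced phase shifts of 2*pi/N."""
    n = len(intensities)
    if n < 3:
        raise ValueError("at least three phase-shifted images are required")
    sin_sum = sum(i * math.sin(2 * math.pi * k / n) for k, i in enumerate(intensities))
    cos_sum = sum(i * math.cos(2 * math.pi * k / n) for k, i in enumerate(intensities))
    # The sine-weighted sum is proportional to -sin(phase) and the
    # cosine-weighted sum to +cos(phase), hence the sign flip.
    return math.atan2(-sin_sum, cos_sum)


if __name__ == "__main__":
    true_phase = 7.0  # larger than pi, so the recovered value is wrapped
    print(wrapped_phase(simulate_pixel(true_phase)))
```

The recovered value differs from the true phase by a multiple of 2π whenever the true phase lies outside (-π, π]. That ambiguity is what phase unwrapping has to resolve.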

Unfortunately, conventional deep learning phase unwrapping methods fail to unwrap the phase in fringe projection 3D imaging because of the special properties of its wrapped phase.

The wrapped phase map in fringe projection differs from that in interferometry in two major ways. First, the calculated phase is typically wrapped in one direction, depending on the relative position of the camera and the projector, and the segments are so similar to one another that labeling each of them is difficult. Second, two sets of measurements are typically needed for 3D imaging with both high resolution and a large depth range. A solution is therefore needed to combine the results of the two measurements into one accurate measurement.
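The article does not say how the two measurements are combined. For orientation only, the sketch below shows one common approach in fringe projection, dual-frequency temporal unwrapping, in which a coarse, unambiguous phase selects the fringe order of a fine, wrapped phase. The function name, the `ratio` parameter, and the sample values are hypothetical, and this is not presented as the team's method.

```python
import math


def combine_two_frequencies(coarse_phase, fine_wrapped, ratio):
    """Combine a coarse, unambiguous phase with a fine, wrapped phase.

    coarse_phase : phase from the low-frequency fringes (no wrapping)
    fine_wrapped : wrapped phase from the high-frequency fringes
    ratio        : number of fine fringe periods per coarse period
    """
    # Scaling the coarse phase predicts the fine absolute phase; rounding
    # the difference to whole periods gives the fringe order.
    order = round((ratio * coarse_phase - fine_wrapped) / (2 * math.pi))
    return fine_wrapped + 2 * math.pi * order


if __name__ == "__main__":
    true_fine = 23.7   # absolute fine phase in this example
    ratio = 8
    coarse = true_fine / ratio + 0.02  # coarse measurement with a small error
    fine = math.atan2(math.sin(true_fine), math.cos(true_fine))
    print(combine_two_frequencies(coarse, fine, ratio))
```

In this scheme the coarse measurement supplies the depth range and the fine measurement supplies the resolution. The result keeps the precision of the fine phase as long as the scaled coarse error stays below half a fringe period.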

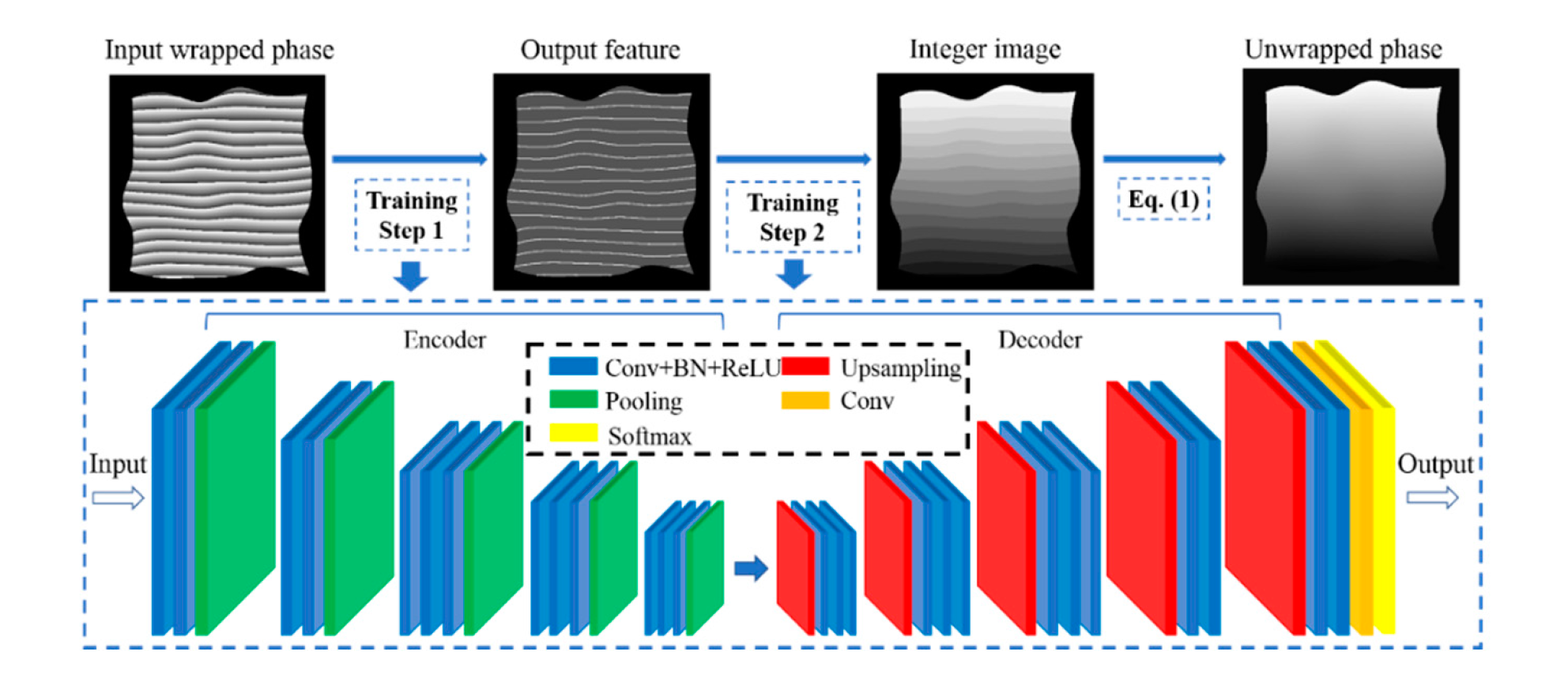

A research team led by Prof. Dr. LIANG Jian from the State Key Laboratory of Transient Optics and Photonics, Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS), proposed a novel phase unwrapping segmentation network for fringe projection 3D imaging. An encoder-decoder strategy is used to train the deep neural network. With the proposed network, accurate 3D images can be generated using a simple reconstruction algorithm, as shown in Fig. 1. The results were published in Sensors.

Fig. 1 The workflow of the proposed method and the network architecture for phase unwrapping (Image by XIOPM)


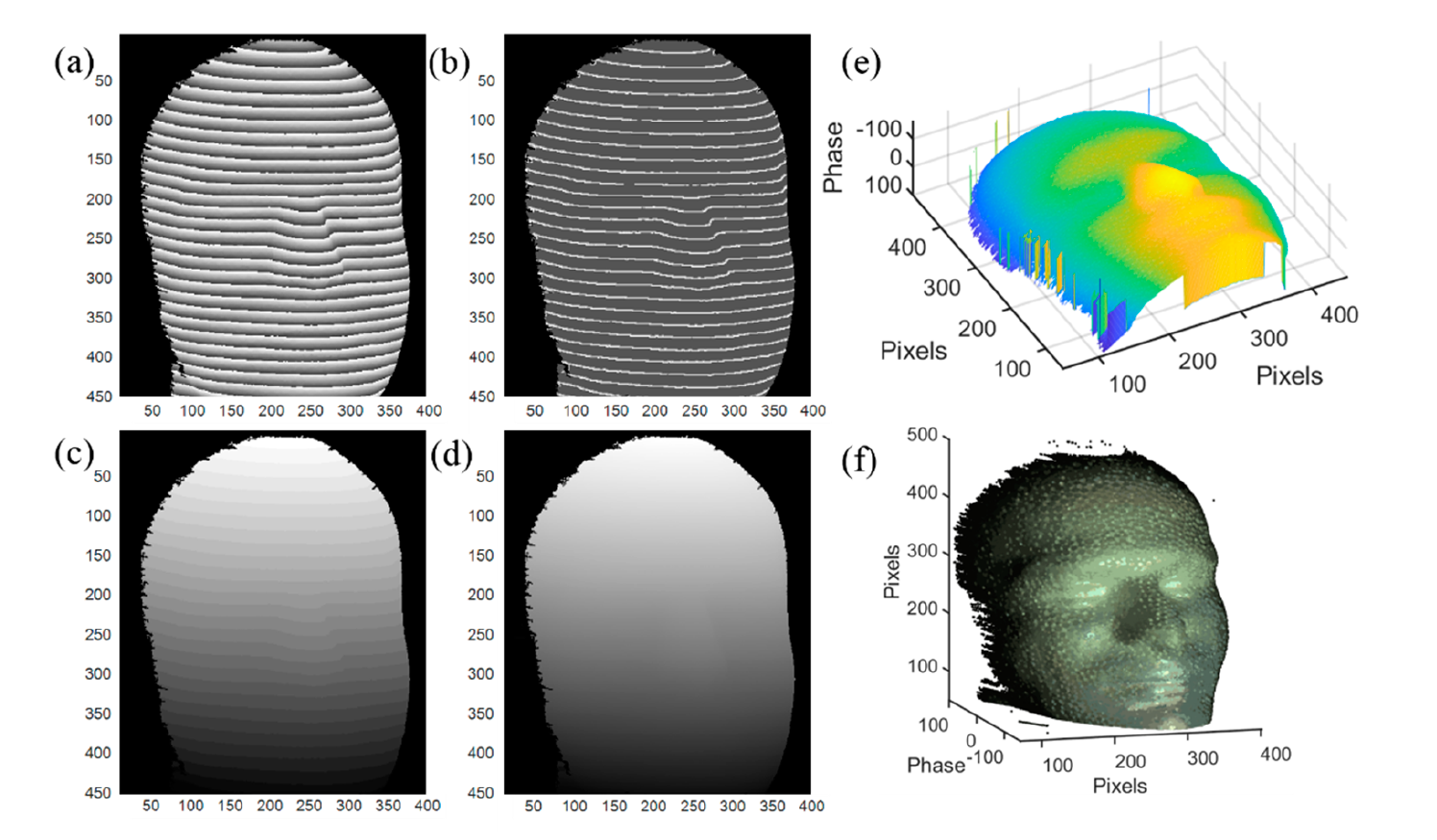

To show that the proposed deep learning phase unwrapping method remains robust when the number of wrapped phase segments exceeds the maximum number of phase segments in the trained neural network, the team measured a human head model made of foam, as shown in Fig. 2. Because the sinusoidal fringe period is smaller, more detail can be recovered in the reconstructed image.

By combining deep neural networks with a phase unwrapping method, the paper directly labels the transition edges of the wrapped phase. This ensures accurate segmentation of the wrapped phase even under severe Gaussian noise and, at the same time, removes the constraint on image size.
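To make the role of transition edges concrete: once every segment of the wrapped phase has an integer label (its fringe order), the unwrapped phase is the wrapped phase plus 2π times that label. The sketch below finds the edges in a single noise-free row with a simple jump threshold, used here as a stand-in for the network's edge labeling. The function name and the threshold rule are assumptions for illustration and would not cope with the noisy data the paper targets.

```python
import math


def unwrap_row(wrapped):
    """Unwrap one row of wrapped phase values by counting transition edges.

    A jump of more than pi between neighbouring samples is treated as a
    transition edge; each edge raises or lowers the segment label by one.
    """
    unwrapped = []
    order = 0          # integer label (fringe order) of the current segment
    previous = None
    for value in wrapped:
        if previous is not None:
            jump = value - previous
            if jump < -math.pi:    # phase fell from near +pi to near -pi
                order += 1
            elif jump > math.pi:   # phase rose from near -pi to near +pi
                order -= 1
        unwrapped.append(value + 2 * math.pi * order)
        previous = value
    return unwrapped


if __name__ == "__main__":
    true_phase = [0.2 * i for i in range(101)]            # ramp from 0 to 20
    wrapped = [math.atan2(math.sin(p), math.cos(p)) for p in true_phase]
    recovered = unwrap_row(wrapped)
    print(max(abs(a - b) for a, b in zip(recovered, true_phase)))
```

Noise easily fools a threshold of this kind, which may be one reason a learned edge labeling is attractive for this task.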

The paper provides a novel idea for fringe projection 3D imaging. We believe that deep neural network based methods may solve more problems in phase unwrapping and 3D imaging in the near future.

Fig. 2 Phase unwrapping and 3D reconstruction of a human head model with thinner sinusoidal stripes (Image by XIOPM)
