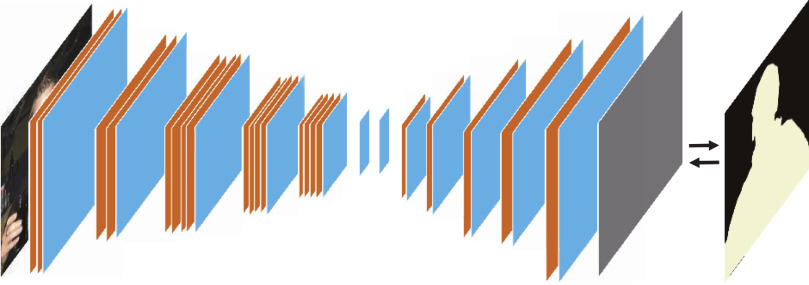

Automatic gender and age prediction has become relevant to a growing number of applications, particularly with the rise of social platforms and social media. However, the performance of existing methods on real-world images remains unsatisfactory, especially compared with that of face recognition. The reason is that facial images for gender and age prediction have inherently small inter-class differences and large intra-class differences; for example, two images that share the same age category label but show different skin colors can differ greatly within that class. Most existing methods have not constructed representations discriminative enough to capture these inherent characteristics. Xiaoqiang Lu’s research team proposed a method based on multi-stage learning. The first stage marks the object regions with an encoder-decoder based segmentation network. Specifically, the segmentation network classifies each pixel into one of two classes, "people" and others, and only the "people" regions are used for subsequent processing. The second stage precisely predicts the gender and age information with the proposed prediction network, which encodes global information, local region information, and the interactions among different local regions into the final representation, and then makes the prediction. The team evaluated the method on three public, challenging datasets, and the experimental results verify its effectiveness.
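The two-stage data flow described above can be illustrated with a minimal sketch. It is not the team's implementation: in the paper both stages are learned networks, whereas here the "people" mask is simply given as input and the representation is built from hand-written statistics. The function names (`apply_people_mask`, `build_representation`), the use of horizontal bands as local regions, and the pairwise products standing in for region interactions are all assumptions made for illustration.

```python
from typing import List

Image = List[List[float]]  # grayscale image as rows of pixel values
Mask = List[List[int]]     # per-pixel label: 1 = "people", 0 = others


def apply_people_mask(image: Image, mask: Mask) -> Image:
    """Stage 1 (stand-in): keep only pixels labeled "people", zero the rest."""
    return [[p if m == 1 else 0.0 for p, m in zip(img_row, mask_row)]
            for img_row, mask_row in zip(image, mask)]


def mean(values: List[float]) -> float:
    """Arithmetic mean, defined as 0.0 for an empty list."""
    return sum(values) / len(values) if values else 0.0


def build_representation(image: Image, n_regions: int = 2) -> List[float]:
    """Stage 2 (stand-in): concatenate global, local, and interaction features."""
    # Global information: one statistic over the whole masked image.
    global_feat = [mean([p for row in image for p in row])]

    # Local region information: one statistic per horizontal band.
    # Rows left over when the height is not divisible by n_regions are ignored.
    band = max(1, len(image) // n_regions)
    local_feats = [mean([p for row in image[i:i + band] for p in row])
                   for i in range(0, band * n_regions, band)]

    # Interactions among local regions: pairwise products (an assumption).
    interactions = [local_feats[i] * local_feats[j]
                    for i in range(len(local_feats))
                    for j in range(i + 1, len(local_feats))]

    return global_feat + local_feats + interactions


# Hypothetical 4x2 image and segmentation mask.
image = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0], [7.0, 8.0]]
mask = [[1, 0], [1, 1], [0, 0], [0, 1]]

masked = apply_people_mask(image, mask)
representation = build_representation(masked)
print(representation)  # [2.0, 2.0, 2.0, 4.0]
```

In a real system the resulting vector would feed a classifier for gender and age; that step is omitted here because the source does not specify it.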

(Original research article: Neurocomputing, Vol. 334, pp. 114-124 (2019), https://www.sciencedirect.com/science/article/pii/S0925231219300220?via%3Dihub)