In multicategory object detection on high-resolution remote sensing images, small objects are consistently difficult to detect. This is because location deviation affects small object detection more than large object detection.

The reason is that, for the same decrease in intersection between a predicted box and a ground-truth box, the Intersection over Union (IoU) of a small object drops more than that of a large object.
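The effect of location deviation on IoU can be illustrated with a small calculation. The sketch below is only an illustration and is not taken from the paper: the box sizes (16 and 128 pixels) and the 4-pixel horizontal offset are assumed values, and `iou` and `shifted_iou` are hypothetical helper names.

```python
def iou(box_a, box_b):
    """IoU of two axis-aligned boxes given as (x1, y1, x2, y2)."""
    ax1, ay1, ax2, ay2 = box_a
    bx1, by1, bx2, by2 = box_b
    # Width and height of the overlap region (zero if the boxes are disjoint).
    inter_w = max(0.0, min(ax2, bx2) - max(ax1, bx1))
    inter_h = max(0.0, min(ay2, by2) - max(ay1, by1))
    inter = inter_w * inter_h
    union = (ax2 - ax1) * (ay2 - ay1) + (bx2 - bx1) * (by2 - by1) - inter
    return inter / union if union > 0 else 0.0


def shifted_iou(size, shift):
    """IoU between a square true box and the same box shifted along x."""
    truth = (0.0, 0.0, float(size), float(size))
    pred = (float(shift), 0.0, float(size + shift), float(size))
    return iou(truth, pred)


# Assumed values: the same 4-pixel offset applied to a small and a large object.
small = shifted_iou(size=16, shift=4)
large = shifted_iou(size=128, shift=4)
print(f"small object IoU: {small:.3f}")
print(f"large object IoU: {large:.3f}")
```

Under these assumed values, the 16-pixel object falls to an IoU of 0.60, while the 128-pixel object stays at about 0.94, so the same offset that barely matters for a large object can push a small object below a typical matching threshold.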

In order to address this challenge, a research team led by Prof. Dr. LU Xiaoqiang from Xi'an Institute of Optics and Precision Mechanics (XIOPM) of the Chinese Academy of Sciences (CAS) proposed a new localization model to improve the location accuracy of small objects.

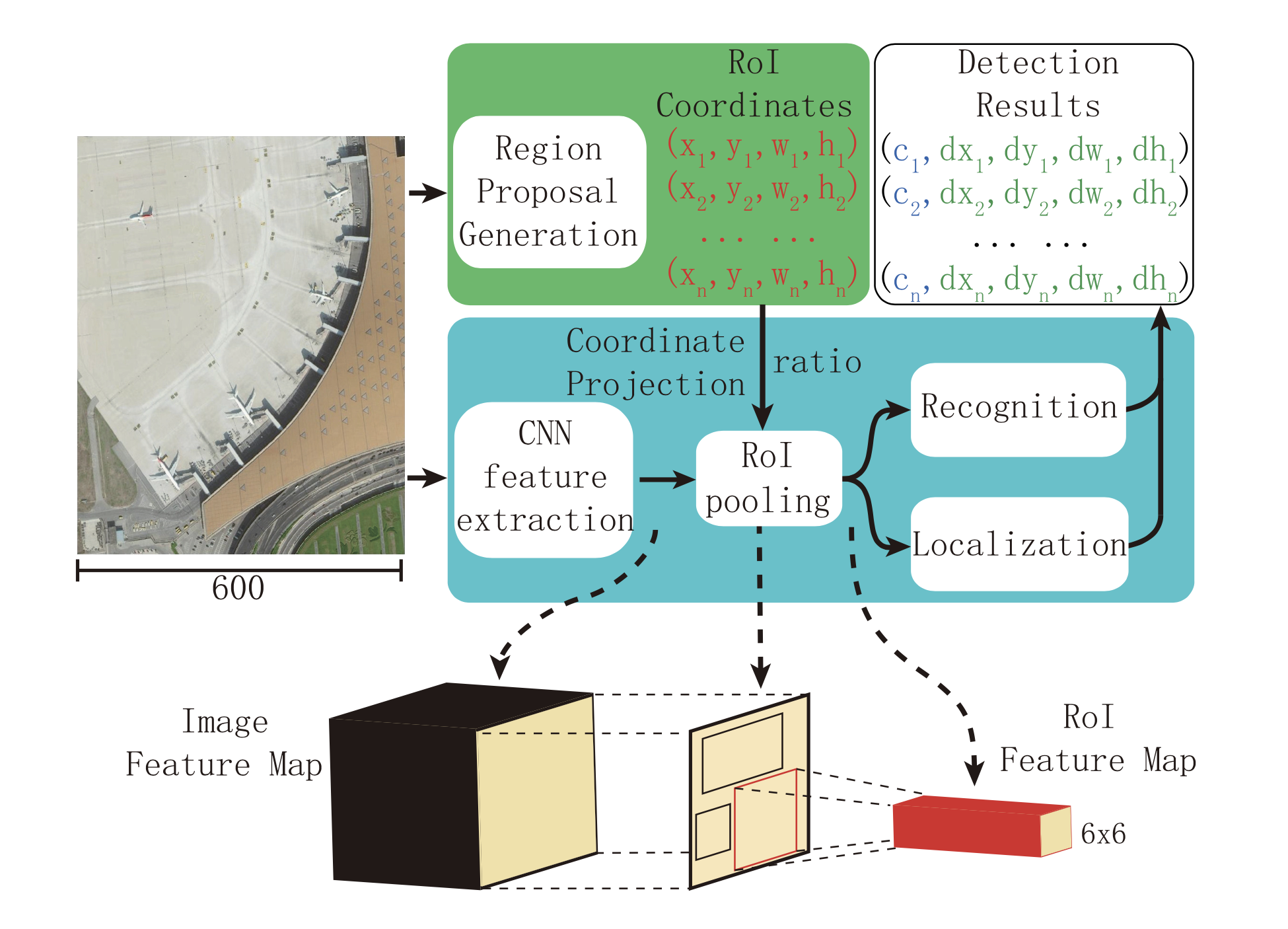

This model is composed of two parts. First, a global feature gating process is proposed to implement a channel attention mechanism on local feature learning. This process takes full advantage of the abundant semantics of global features and the spatial details of local features. In this way, more effective information is selected for small object detection. Second, an axis-concentrated prediction (ACP) process is adopted to project convolutional feature maps into different spatial directions, so as to avoid interference between coordinate axes and improve the location accuracy. Then, coordinate prediction is implemented with a regression layer using the learned object representation.
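The two parts can be sketched roughly as follows. This is a minimal NumPy illustration under stated assumptions, not the authors' implementation: the article does not give layer details, so the gate is assumed to be a sigmoid over a linear map of average-pooled global features, the axis projection is assumed to be mean pooling along one spatial axis, and all function names, shapes, and random weights are hypothetical.

```python
import numpy as np


def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))


def global_feature_gate(local_feat, global_feat, w, b):
    """Channel attention (assumed form): global features gate local channels.

    local_feat:  (C, H, W) feature map with fine spatial detail
    global_feat: (Cg, Hg, Wg) feature map with richer semantics
    w, b:        weights (C, Cg) and bias (C,) of an assumed linear gate
    """
    pooled = global_feat.mean(axis=(1, 2))        # (Cg,) global descriptor
    gate = sigmoid(w @ pooled + b)                # (C,) one weight per channel
    return local_feat * gate[:, None, None], gate


def axis_concentrated_predict(feat, wx, wy):
    """Assumed ACP form: pool along one axis, regress the other axis alone."""
    x_profile = feat.mean(axis=1)                 # (C, W), height collapsed
    y_profile = feat.mean(axis=2)                 # (C, H), width collapsed
    x_coords = wx @ x_profile.ravel()             # e.g. (x1, x2)
    y_coords = wy @ y_profile.ravel()             # e.g. (y1, y2)
    return x_coords, y_coords


# Hypothetical shapes and random weights, purely for demonstration.
rng = np.random.default_rng(0)
local = rng.normal(size=(8, 16, 16))
global_feat = rng.normal(size=(32, 4, 4))
w, b = rng.normal(size=(8, 32)) * 0.1, np.zeros(8)
wx, wy = rng.normal(size=(2, 8 * 16)), rng.normal(size=(2, 8 * 16))

gated, gate = global_feature_gate(local, global_feat, w, b)
x_pred, y_pred = axis_concentrated_predict(gated, wx, wy)

# Reordering rows (the y direction) leaves the x prediction unchanged.
shuffled = gated[:, rng.permutation(16), :]
x_shuf, _ = axis_concentrated_predict(shuffled, wx, wy)
print(np.allclose(x_pred, x_shuf))
```

In this sketch, the x coordinates depend only on the height-pooled profile, so changes along the y direction do not disturb them. That is one simple way to read "avoiding interference between coordinate axes"; the actual projection used in the paper may differ.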

In our experiments, we explore the relationship between detection accuracy and object scale, and the results show that our method yields distinct performance improvements for small objects. Compared with the classical deep learning detection models, the proposed gated axis-concentrated localization.

Overall framework of the detection system. (Image by XIOPM)

(Original research article: IEEE Transactions on Geoscience and Remote Sensing, January 2020, https://doi.org/10.1109/TGRS.2019.2935177)