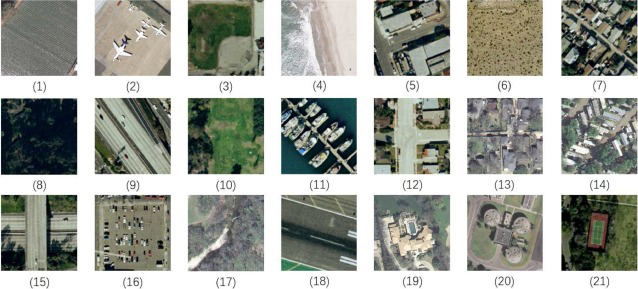

Scene classification has become an effective way to interpret high spatial resolution (HSR) remote sensing images. Recently, convolutional neural networks (CNNs) have proven highly effective for scene classification. However, simply applying deep models directly to aerial images as feature extractors is not sufficient, because the extracted deep features do not adequately capture the spatial scale and rotation variability found in HSR remote sensing images. To address this limitation, Xiaoqiang Lu’s research team investigated a bidirectional adaptive feature fusion strategy for remote sensing scene classification, in which deep learning features and SIFT (scale-invariant feature transform) features are fused into a discriminative image representation. The fused feature describes scenes effectively through the deep learning feature while overcoming scale and rotation variability through the SIFT feature. By fusing SIFT features with global CNN features, their method achieves state-of-the-art scene classification performance on the UCMerced, Sydney and AID datasets.

(Original research article: Neurocomputing, Vol. 328, SI, pp. 135-146 (2019), https://www.sciencedirect.com/science/article/pii/S0925231218309470?via%3DihDi)
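The summary above does not specify how the bidirectional adaptive fusion works internally. To illustrate the general idea of combining a global CNN feature with a SIFT-based descriptor, here is a minimal sketch of a much simpler, generic technique: weighted concatenation of L2-normalized feature vectors. This is not the authors' method. The function names `l2_normalize` and `fuse_features` and the fixed weight `alpha` are hypothetical, and the SIFT input is assumed to be already encoded as a fixed-length vector (for example, a bag-of-visual-words histogram).

```python
import math
from typing import List, Sequence


def l2_normalize(vec: Sequence[float]) -> List[float]:
    """Scale a vector to unit Euclidean length (zero vectors are returned unchanged)."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0.0:
        return [float(x) for x in vec]
    return [x / norm for x in vec]


def fuse_features(cnn_feat: Sequence[float],
                  sift_feat: Sequence[float],
                  alpha: float = 0.5) -> List[float]:
    """Hypothetical weighted-concatenation fusion of a global CNN feature
    and a SIFT-based descriptor (e.g. a bag-of-visual-words histogram).

    alpha weights the CNN part and (1 - alpha) weights the SIFT part.
    This is a generic late-fusion baseline, NOT the bidirectional
    adaptive fusion strategy described in the paper.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    # Normalize each feature type so neither dominates merely because of its scale.
    cnn_part = [alpha * x for x in l2_normalize(cnn_feat)]
    sift_part = [(1.0 - alpha) * x for x in l2_normalize(sift_feat)]
    # Concatenate into one descriptor for a downstream classifier (e.g. an SVM).
    return cnn_part + sift_part


if __name__ == "__main__":
    cnn = [3.0, 4.0]          # toy stand-in for a deep CNN feature
    sift = [0.0, 2.0, 0.0]    # toy stand-in for a SIFT bag-of-words histogram
    print(fuse_features(cnn, sift))
```

Normalizing before concatenation is a common design choice because raw CNN activations and SIFT histograms usually live on very different scales. An adaptive scheme such as the one in the paper would, presumably, learn or compute the relative weighting instead of fixing `alpha` by hand.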